Asynchronous Rust

Asynchronous Rust programming is a disaster and a mess.

Well, I don’t really mean that. It can actually be rather pleasant, once you get to know it. Some aspects of the async APIs can make you marvel at the infinite genius of the people who designed them, while others make you want to yell the above sentence loudly to anyone who will listen. The truth is, async programming is hard in a language that isn’t really designed for it, and, as such, it feels rather unidiomatic.

Welcome to the Future

If you haven’t already, I recommend you go and read

the “Zero-cost futures in Rust” blog post. Basically, the upshot of it is

this: this all-new Future trait (a future = an object representing a

computation that “might not be ready yet”) solves everything, and it’s all

implemented (well, mostly) in one method poll(). What poll() does is ask “is

the future ready yet?”. If the future is ready - i.e. the asynchronous

computation has completed - it duly serves up whatever information it was

designed to output (the Future::Item type). If it isn’t, it returns

Async::NotReady and arranges (through some ~~complex black magic~~ actually

quite logical APIs) to be poll()ed when it’s next able to make progress. You

run futures in an event loop, which is responsible for performing all the

scheduling around figuring out when futures need to be polled.
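To make the poll() contract concrete, here is a minimal sketch. It does not use the real futures crate: `Async`, `Poll` and `Future` below are simplified stand-ins for the futures 0.1 types, and `Countdown` and `run` are hypothetical names invented for this example. The "event loop" is just a busy loop, whereas a real one would sleep until the future asked to be woken.

```rust
// Simplified stand-ins for the futures 0.1 types described above.
// (Assumption: the real `Future` trait has many more provided methods.)
#[derive(Debug, PartialEq)]
enum Async<T> {
    Ready(T),
    NotReady,
}

type Poll<T, E> = Result<Async<T>, E>;

trait Future {
    type Item;
    type Error;
    fn poll(&mut self) -> Poll<Self::Item, Self::Error>;
}

/// Hypothetical future that needs `remaining` more polls before it is ready.
struct Countdown {
    remaining: u32,
}

impl Future for Countdown {
    type Item = &'static str;
    type Error = ();

    fn poll(&mut self) -> Poll<Self::Item, Self::Error> {
        if self.remaining == 0 {
            Ok(Async::Ready("done"))
        } else {
            self.remaining -= 1;
            // A real future would arrange to be woken up here.
            Ok(Async::NotReady)
        }
    }
}

/// Toy "event loop": polls until ready, returning the value and the poll count.
fn run<F: Future>(mut fut: F) -> Result<(F::Item, u32), F::Error> {
    let mut polls = 0;
    loop {
        polls += 1;
        if let Async::Ready(value) = fut.poll()? {
            return Ok((value, polls));
        }
    }
}

fn main() {
    let (value, polls) = run(Countdown { remaining: 2 }).unwrap();
    println!("{} after {} polls", value, polls);
}
```

The point is only the shape of the interaction: the caller keeps asking, and the future answers either "here you go" or "not yet".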

So, if I needed to make an HTTP request over the network, I might have some function

    fn make_request(url: &str, method: &str) -> HttpFuture { /* magic */ }

which, as you can see, returns some sort of Future. When I call it, it would attempt

to make progress on sending the request out without blocking. It would make some progress,

but then realise it would have to block to continue, and, instead of blocking, it would

arrange for it to be polled when it can make more progress (using some operating system API)

and set things up with the event loop to make that all work. It would then duly report that

it was Async::NotReady, and hand control back to the event loop (which, presumably, would have called it

in the first place) so that the event loop could make progress on other futures. Yay!

Given my incredibly pessimistic opening one-liner, you’re probably wondering

when the rants are going to start. Well, surprisingly, I think this part of the

futures crate is actually pretty well-designed - it helps abstract over a

complicated topic in an elegant (and composable) way that fits in with Rust’s

way of doing things. Composability is a HUGE plus - no matter what kind of

Future I’m dealing with, it’s easy for me to interact with them all in one

unified Future interface that works with everything else that speaks

Futures. Callbacks, for example, don’t really work that nicely this way - you

hand off a callback to some strange function, and you have no idea what sequence

of events will result in your callback being called. It’s also more annoying for

you to make a wrapper over a callback-based API - whereas with futures, it’s

simple.

So, Futures are the future, at least for now. But…

Future combinators

This is where things start to get a bit wobbly. Lemme quote a bit from that blog post again…

A major tenet of Rust is the ability to build zero-cost abstractions, and that leads to one additional goal for our async I/O story: ideally, an abstraction like futures should compile down to something equivalent to the state-machine-and-callback-juggling code we’re writing today (with no additional runtime overhead).

The way these zero-cost abstractions are implemented involves the use of

a veritable smorgasbord of wrapper structs that take some

Future and allow you to perform common operations on it, like map()ping over

its value or chaining another computation to the end of it.
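As a rough illustration of what such a wrapper struct looks like, here is a sketch of a map()-style combinator. The `Future` trait is again a simplified stand-in for futures 0.1, and `Done`, `Map`, `map` and `poll_once` are hypothetical names for this example, not the crate’s actual definitions.

```rust
// Simplified stand-ins for the futures 0.1 types (assumption, not the real crate).
enum Async<T> {
    Ready(T),
    NotReady,
}

type Poll<T, E> = Result<Async<T>, E>;

trait Future {
    type Item;
    type Error;
    fn poll(&mut self) -> Poll<Self::Item, Self::Error>;
}

/// Hypothetical leaf future that is ready immediately.
struct Done<T>(Option<T>);

impl<T> Future for Done<T> {
    type Item = T;
    type Error = ();
    fn poll(&mut self) -> Poll<T, ()> {
        Ok(Async::Ready(self.0.take().expect("polled after completion")))
    }
}

/// Wrapper struct in the style of a map() combinator: it owns the inner
/// future and the closure, so no allocation is needed.
struct Map<A, F> {
    future: A,
    f: Option<F>,
}

impl<A, F, U> Future for Map<A, F>
where
    A: Future,
    F: FnOnce(A::Item) -> U,
{
    type Item = U;
    type Error = A::Error;

    fn poll(&mut self) -> Poll<U, A::Error> {
        match self.future.poll()? {
            Async::Ready(value) => {
                let f = self.f.take().expect("polled after completion");
                Ok(Async::Ready(f(value)))
            }
            Async::NotReady => Ok(Async::NotReady),
        }
    }
}

fn map<A, F, U>(future: A, f: F) -> Map<A, F>
where
    A: Future,
    F: FnOnce(A::Item) -> U,
{
    Map { future, f: Some(f) }
}

/// Polls once and returns the value if the future was ready.
fn poll_once<F: Future>(mut fut: F) -> Option<F::Item> {
    match fut.poll() {
        Ok(Async::Ready(value)) => Some(value),
        _ => None,
    }
}

fn main() {
    // The concrete type is Map<Map<Done<i32>, {closure}>, {closure}>:
    // every combinator adds another layer of generics.
    let fut = map(map(Done(Some(20)), |x| x + 1), |x| x * 2);
    println!("{:?}", poll_once(fut));
}
```

Note how the type of `fut` already nests two levels deep after two calls; that nesting is where the long types come from.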

By and large, this also makes sense. However, it is not without its faults.

They said Rust error messages with generics are shorter than those of C++…

The above Reddit post features a case where use of combinators has resulted in

an ugly, really long type that takes up half of my 11-inch screen. There’s

clearly some problem here: whether it’s the futures crate itself that’s at

fault for encouraging the use of lots of combinators with lots of pointy generic

type parameters, or the author for trying to use lots of combinators, or the

compiler for (reasonably) not being able to provide much help when something

goes wrong with the combinator salad. It’s composability gone wrong, in a way.

This combinator salad problem also means that it’s nigh-impossible to name the

return type of a complex Future-returning function (that is, without impl

Trait, a handy language extension created to solve this problem, or without

Box<>ing the hell out of everything, which sucks a whole bunch).
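The two escape hatches mentioned here can be sketched side by side. As before, `Future` is a cut-down stand-in trait (with the error type omitted for brevity), and `Ready`, `boxed`, `unboxed` and `resolve` are hypothetical names used only for illustration.

```rust
// Simplified stand-in for the futures 0.1 trait (assumption: no error type).
enum Async<T> {
    Ready(T),
    NotReady,
}

trait Future {
    type Item;
    fn poll(&mut self) -> Async<Self::Item>;
}

/// Hypothetical future that is ready on the first poll.
struct Ready<T>(Option<T>);

impl<T> Future for Ready<T> {
    type Item = T;
    fn poll(&mut self) -> Async<T> {
        match self.0.take() {
            Some(value) => Async::Ready(value),
            None => Async::NotReady,
        }
    }
}

// Option 1: box it. Easy to name, at the cost of a heap allocation
// and dynamic dispatch on every poll.
fn boxed() -> Box<dyn Future<Item = u32>> {
    Box::new(Ready(Some(7)))
}

// Option 2: `impl Trait`. The caller cannot name the concrete type,
// but the compiler knows it, so calls are statically dispatched.
fn unboxed() -> impl Future<Item = u32> {
    Ready(Some(7))
}

/// Polls once and returns the value if the future was ready.
fn resolve<F: Future + ?Sized>(fut: &mut F) -> Option<F::Item> {
    match fut.poll() {
        Async::Ready(value) => Some(value),
        Async::NotReady => None,
    }
}

fn main() {
    let mut a = boxed();
    let mut b = unboxed();
    println!("{:?} {:?}", resolve(&mut *a), resolve(&mut b));
}
```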

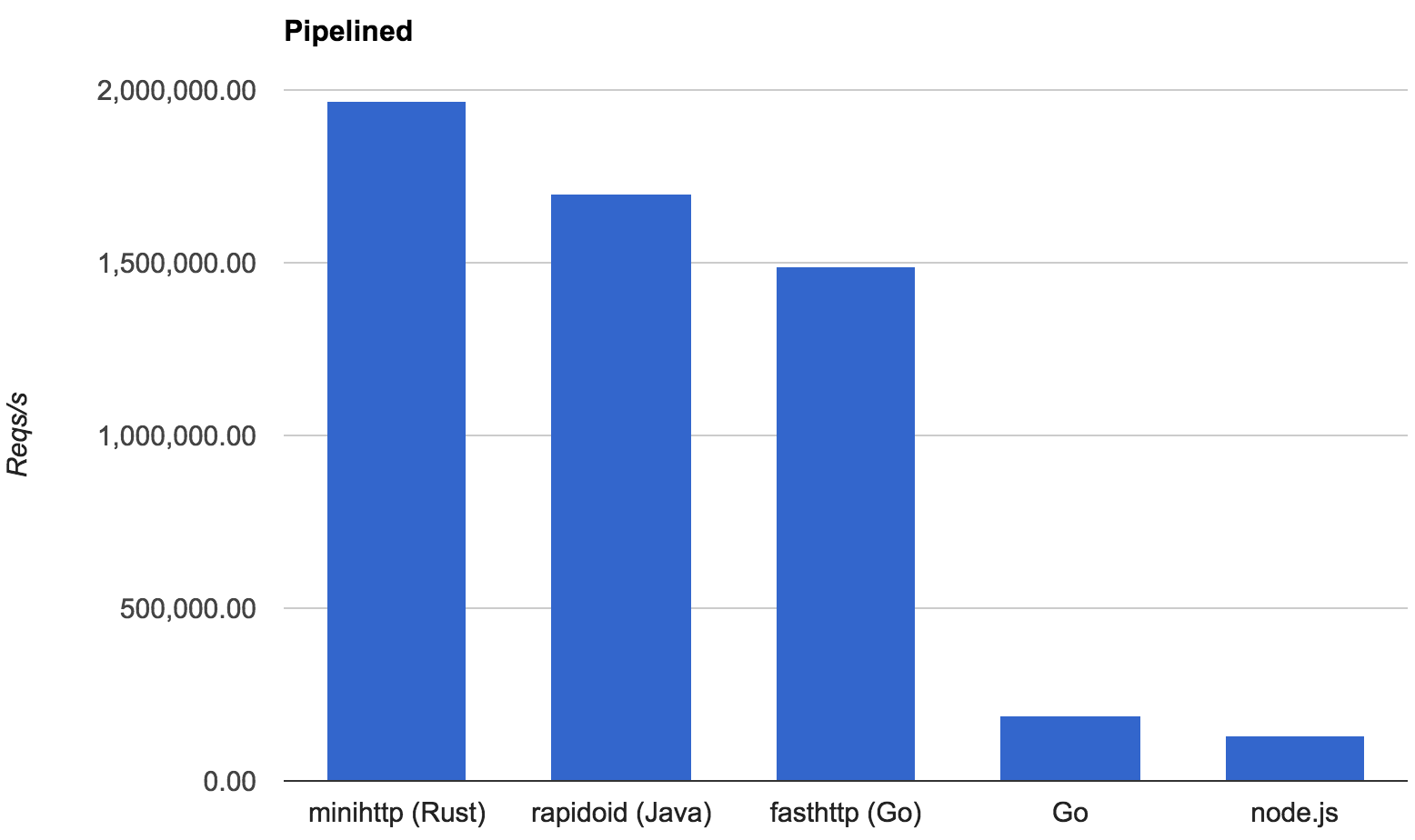

As for runtime performance, I think that the futures developers were probably

right when they said it was zero-cost. (Or not; more on that later.) The pretty graphs

they provide seem to imply that this is the case:

But what about the combinator salad problem?

enums. enums everywhere.

The futures blog post that I carry on referencing contained another interesting little quote:

When we do things like chain uses of and_then, not only are we not allocating, we are in fact building up a big enum that represents the state machine.

Lies! Go look at that long combinators type from earlier,

and notice the lack of anything remotely enum-like in that whole soup. When

you or I read that above sentence, we probably thought something along the lines

of “It’s an enum! I know this!”. Alas, it was not to be.

But what if we actually made a large enum? What if we designed a system that,

instead of creating type soup, simplified everything down into one big enum

per async function?

Let me write a bit of example futures code. (a lot of this is stolen from the

README of the futures crate itself, by the way.)

    use std::io;
    use std::net::{SocketAddr, TcpStream};

    fn download(url: &str)
        -> Box<dyn Future<Item=File, Error=io::Error>> {
        // First thing to do is we need to resolve our URL to an address. This
        // will likely perform a DNS lookup which may take some time.
        let addr = resolve(url);

        // After we acquire the address, we next want to open up a TCP
        // connection.
        let tcp = addr.and_then(|addr| connect(&addr));

        // After the TCP connection is established and ready to go, we're off to
        // the races! (`fetch` is a hypothetical helper, named so that it doesn't
        // clash with this function.)
        let data = tcp.and_then(|conn| fetch(conn));

        // Alright, now let's parse our data (we're assuming it's some form of JSON or something)
        let data = data.map(|data| parse::<File>(data));

        // ok, return the future object
        Box::new(data)
    }

Notice the use of a Box to hide the type soup we inadvertently created. Now, let me present

how I would like this code to actually work.

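    use std::mem;

    enum DownloadFuture {
        Resolving(DnsFuture),
        Connecting(TcpFuture),
        Downloading(DownloadingFuture),
        Complete
    }

    impl Future for DownloadFuture {
        type Item = File;
        type Error = io::Error;

        fn poll(&mut self) -> Poll<Self::Item, Self::Error> {
            use self::DownloadFuture::*;

            // Loop so that a freshly created inner future gets polled straight
            // away; returning NotReady without polling it would mean nothing
            // ever wakes this task up again.
            loop {
                // Take the current state out of `self`, leaving `Complete` behind.
                match mem::replace(self, Complete) {
                    Resolving(mut df) => {
                        if let Async::Ready(addr) = df.poll()? {
                            *self = Connecting(connect(&addr));
                        }
                        else {
                            *self = Resolving(df);
                            return Ok(Async::NotReady);
                        }
                    },
                    Connecting(mut tf) => {
                        if let Async::Ready(conn) = tf.poll()? {
                            // `fetch` is a hypothetical helper, named so that it
                            // doesn't clash with `download` below.
                            *self = Downloading(fetch(&conn));
                        }
                        else {
                            *self = Connecting(tf);
                            return Ok(Async::NotReady);
                        }
                    },
                    Downloading(mut df) => {
                        if let Async::Ready(data) = df.poll()? {
                            let data = parse::<File>(data);
                            // `self` is already `Complete` thanks to mem::replace.
                            return Ok(Async::Ready(data));
                        }
                        else {
                            *self = Downloading(df);
                            return Ok(Async::NotReady);
                        }
                    },
                    Complete => panic!("DownloadFuture polled after completion"),
                }
            }
        }
    }

    fn download(url: &str) -> DownloadFuture {
        DownloadFuture::Resolving(resolve(url))
    }

Okay, this version is longer. (A lot longer…) It also has some repetitive boilerplate that could be solved with judicious use of macros. However, it makes more sense: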

- It actually is an enum, so you can clearly see the different states the future can be in

- If something goes wrong with it, it’s easy to find out where; there’s no “type soup” or maze of closures with opaque types.

- The futures involved are named, and not boxed - it’s easy to name all the types involved, so this actually is a zero-cost abstraction

- Error propagation is simple, and is discreetly handled by the ? operator, just like in regular functions. If, for example, I wanted my parse() function to return an error, it’s easy to modify the enum-based version, whereas I’d need to go and find out which bloody combinator I need to make the previous one work, and probably encounter a type soup-y error in the process of doing so.

- You’ll probably end up writing code like this via macros. If that happens, and the macro goes wrong, you can simply expand the macro invocation to see what’s wrong.

So yeah, something to think about - maybe we don’t have to consign ourselves to the

futures combinator salad just yet. My point is, we should ideally consider alternatives

to the current implementation of futures before we rush to make all sorts of (slightly inefficient)

libraries based on it.

Oh yeah, slightly inefficient libraries.

Tokio

(This is where the rant really starts.)

I’m going to quote redditor /u/Quxxy, who expressed here how difficult tokio is to understand.

I’m currently trying to write an event loop built on futures.

It doesn’t help that every time I try to trace the execution of anything from tokio, it just bounces around back and forth through the layers of abstraction and multiple crates, to say nothing of the damn global variables that make it exponentially harder to tell what’s going on. It feels like there’s nothing in there that you can understand in isolation: there are no leaves to the abstraction tree, just a cyclic graph that keeps branching. I still haven’t worked out what task::park does. It doesn’t park anything! It clones a handle! No, wait, it clones an handle, and a Vec of events, but I’m not sure what those even do, and why does it just snapshot them? What happens if someone calls park twice? Does it explode? Does it collapse into a black hole? Does my computer turn into a reindeer and ride away on a rainbow? Is park only compatible with some of the waking methods? All of them? Do I have to use park for task_local! to work?

Okay, so some of this is about how Futures are supposed to actually communicate their readiness to the

event loop. From what I gather, this seems to work like this now:

- Your future gets poll()ed by an event loop. This is important, because the event loop sets a couple of global variables that make the following pieces of black magic work.

- You do an async computation that doesn’t complete.

- You now call futures::task::current, which references one of those black magic global variables and produces a Task, which is Send + 'static + Clone.

- You squirrel away your Task somewhere (e.g. sending it to a thread that’s performing the blocking computation), and, when you’re ready to be polled again, call Task::notify().
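The steps above can be sketched with nothing but the standard library. This is an analogy, not the futures API: the `Task` type below is a hypothetical stand-in that wraps a `std::thread::Thread` handle (which also happens to be Send + 'static + Clone), with `unpark()` playing the role of `Task::notify()`. `Blocking` and `block_on` are likewise invented names.

```rust
use std::sync::{Arc, Mutex};
use std::thread;
use std::time::Duration;

/// Stand-in for futures' `Task` (assumption): a handle to the polling thread.
#[derive(Clone)]
struct Task(thread::Thread);

impl Task {
    /// Analogue of `futures::task::current()`.
    fn current() -> Task {
        Task(thread::current())
    }

    /// Analogue of `Task::notify()`: wake whoever is polling us.
    fn notify(&self) {
        self.0.unpark();
    }
}

/// Hypothetical future whose value is computed on a worker thread.
struct Blocking {
    slot: Arc<Mutex<Option<u64>>>,
    started: bool,
}

impl Blocking {
    fn new() -> Blocking {
        Blocking { slot: Arc::new(Mutex::new(None)), started: false }
    }

    /// `Some(value)` means ready, `None` means not ready yet.
    fn poll(&mut self) -> Option<u64> {
        if !self.started {
            self.started = true;
            // Grab a handle to the current task...
            let task = Task::current();
            let slot = Arc::clone(&self.slot);
            // ...and squirrel it away on the thread doing the blocking work.
            thread::spawn(move || {
                thread::sleep(Duration::from_millis(10)); // pretend this blocks
                *slot.lock().unwrap() = Some(42);
                task.notify(); // ready to be polled again
            });
        }
        self.slot.lock().unwrap().take()
    }
}

/// Toy event loop: poll, then sleep until somebody calls `notify()`.
fn block_on(mut fut: Blocking) -> u64 {
    loop {
        if let Some(value) = fut.poll() {
            return value;
        }
        // park() may also wake spuriously, in which case we just poll again.
        thread::park();
    }
}

fn main() {
    println!("{}", block_on(Blocking::new()));
}
```

The real crate finds the current task through task-local state set up by the executor rather than through the OS thread, but the hand-off has the same shape.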

But that’s beside the point; I actually think that bit makes sense, for the most part. We’re actually talking

about the tokio library itself, which does tend to be incredibly confusing.

To illustrate my point, let’s try and trace down how the hell a hyper HTTP server works.

- START: hyper docs

- Okay, so we want the Server struct, for HTTP servers. -> hyper::server::Server

- “An instance of a server created through Http::bind”. Oh, okay. What’s Http? -> hyper::server::Http

- “An instance of the HTTP protocol, and implementation of tokio-proto’s ServerProto trait.” Oh no, tokio’s making its appearance. Before we attempt to go further down the rabbit hole, however, I want to point out something mind-bendingly stupid: hyper::server::Http::bind.

  - fn bind<S, Bd>(&self, addr: &SocketAddr, new_service: S) -> Result<Server<S, Bd>>. Okay.

  - where S: NewService<Request = Request, Response = Response<Bd>, Error = Error> + Send + Sync + 'static. Umm.

  - “Each connection will be processed with the new_service object provided as well, creating a new service per connection.”

A new service per connection?! How the heck does that make ANY sense? Why would that even be needed? Why would I want to clone my server object every time I get a connection? How in the hells does this actually work performantly?!

Maybe I just don’t get it. Maybe we’re supposed to make 1 billion copies of

everything now, and this is just the way tokio works. Even so, this crazy

fact is just dropped in casually in the hyper docs, with no explanation for

why the hell this is the case.

Perhaps we’ll find an answer somewhere deep in tokio-proto land. Moving swiftly on…

- I think it’s time to dive into ServerProto. *scrolls to bottom, clicks trait name* -> tokio_proto::streaming::pipeline::ServerProto

- Oh wow, not one, not two, but SEVEN associated types. That’s okay, I bet they are there for a reason. (Hah!)

  - Request(Body), Response(Body) and Error look vaguely acceptable, I guess.

  - type Transport: Transport<Item = Frame<Self::Request, Self::RequestBody, Self::Error>, SinkItem = Frame<Self::Response, Self::ResponseBody, Self::Error>> Oh hello, type soup, my old friend. What’s a Transport?

  - type BindTransport: IntoFuture<Item = Self::Transport, Error = Error>: “A future for initializing a transport from an I/O object.” Well, that makes some sense.

- Ah, here we are, required methods.

  - fn bind_transport(&self, io: T) -> Self::BindTransport: Build a transport from the given I/O object, using self for any configuration.

- Okay, so this entire thing seems to consume some unknown I/O object thing T and make a Transport. What’s a Transport? -> tokio_proto::streaming::pipeline::Transport

  - pub trait Transport: 'static + Stream<Error = Error> + Sink<SinkError = Error>… Oh, it’s a Sink and Stream. Except the actual Item of each remains to be discovered. Huh.

- Maybe we should just check the crate-level documentation… -> tokio_proto

- Oh, I get it. How tokio_proto is supposed to work is: you define your ServerProto, which is essentially a way to wrap your raw I/O object (like a socket) in something like a Framed, which actually reads and writes and produces some nice Request and Response objects.

- Oh, but wait. There it is again, at the bottom of the README:

    // Finally, we can actually host this service locally!
    fn main() {
        let addr = "0.0.0.0:12345".parse().unwrap();
        TcpServer::new(IntProto, addr)
            .serve(|| Ok(Doubler));
    }

Apart from the comment that clearly expresses the author’s frustration with the 9001 layers of abstraction hoops they had to jump through to just host a god-damn server, this has another worrying aspect to it. It’s the clone-the-server-for-every-connection thing again: .serve(|| Ok(Doubler)). There’s a sneaky closure there that implies you have to call some function for every connection.

Let’s have a look at TcpServer then.

- -> tokio_proto::TcpServer “A builder for TCP servers.” Okay, what about TcpServer::serve?

  - fn serve<S>(&self, new_service: S) where S: NewService + Send + Sync + 'static, S::Instance: 'static, P::ServiceError: 'static, P::ServiceResponse: 'static, P::ServiceRequest: 'static, S::Request: From<P::ServiceRequest>, S::Response: Into<P::ServiceResponse>, S::Error: Into<P::ServiceError>. Golly gee, that’s a lot of where clauses.

- The important one is S: NewService. NewService has one method, new_service(), which - you guessed it - makes a new service.

- It doesn’t explain why.

Oh hang on…

- impl<Kind, P> TcpServer<Kind, P> where P: BindServer<Kind, TcpStream> + Send + Sync + 'static

- BindServer *clicks* -> tokio_proto::BindServer

- “Binds a service to an I/O object.” Okay.

- Ooh, here we are, bind_server: “This method should spawn a new task on the given event loop handle which provides the given service on the given I/O object.”

What’s a Service?

- Oh, here we go, another crate. -> tokio_service::Service

- “An asynchronous function from Request to a Response.”

- “The Service trait is a simplified interface making it easy to write network applications in a modular and reusable way, decoupled from the underlying protocol. It is one of Tokio’s fundamental abstractions.”

Oh, okay, then. I still don’t know why the tokio stack expects you to instantiate a new service for every connection.

Now that I’ve calmed down a bit, here’s why I think cloning the service object

for every connection is a bad idea™. You have this Service trait, right? It

makes Futures of some description from Requests of some description. This

makes sense; it’s like an asynchronous function that processes requests.

Of course, you can’t borrow from &mut self in your Future, because you might

want to handle more than one request at a time. The way most Rustaceans would

deal with this problem is to give their Future object some sort of Arc<Mutex<T>>,

and stuff some state in that. Job done. (You might also experiment with some sort

of interior mutability magic with &self and Cells, but I’m not sure how well

that would work with the futures ecosystem that seems to demand 'static everywhere.)

The practical upshot of this is: we don’t store state in the Service, because

it’s useless there. (Or rather: the Service is not the unique owner of state.)

It therefore makes no sense to clone our Service, which presumably just holds a bunch

of Arc<Mutex<T>>s, for every connection - we’re just needlessly incrementing & decrementing

a refcount (and possibly incurring some synchronisation costs from the atomic operations).

We’re going to clone (parts of) our Service anyway, because we’re returning a Future

that needs to somehow store state. So why perform this needless extra clone? Just have

a Service, and maybe clone it once for each thread, if you like - but don’t clone

on every connection.
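To show what that extra clone amounts to, here is a small sketch using only the standard library. `CounterService` and `serve_connections` are hypothetical names; the loop merely imitates a new_service-style closure being invoked once per connection and is not how tokio-proto is actually implemented.

```rust
use std::sync::{Arc, Mutex};

/// Hypothetical service whose only state lives behind an Arc<Mutex<T>>.
#[derive(Clone)]
struct CounterService {
    hits: Arc<Mutex<u64>>,
}

impl CounterService {
    fn new() -> CounterService {
        CounterService { hits: Arc::new(Mutex::new(0)) }
    }

    /// Handles one request by bumping the shared counter.
    fn handle(&self) -> u64 {
        let mut hits = self.hits.lock().unwrap();
        *hits += 1;
        *hits
    }
}

/// Imitates a per-connection factory: one clone of the service per connection.
/// Returns the total hit count and the number of live handles to the state.
fn serve_connections(template: &CounterService, connections: usize) -> (u64, usize) {
    let mut live = Vec::new();
    for _ in 0..connections {
        // The clone copies no state; it only bumps the atomic refcount.
        let service = template.clone();
        service.handle();
        live.push(service);
    }
    let total = *template.hits.lock().unwrap();
    (total, Arc::strong_count(&template.hits))
}

fn main() {
    let (hits, handles) = serve_connections(&CounterService::new(), 3);
    println!("hits = {}, live handles = {}", hits, handles);
}
```

Every clone shares the same counter, so the per-connection copies buy nothing except refcount traffic; a single service borrowed by all connections would observe exactly the same state.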

So, something clearly needs to be improved in the tokio ecosystem. Whether it’s documentation

on how the various pieces of abstraction line up, simplification of the pieces of abstraction

themselves, something else, or all of the above, the tokio crates seem to have an alienating

effect for those unfamiliar with their labyrinthine nature. I’m really still not convinced

that tokio-proto and friends are really zero-cost abstractions - partially because there

are so many layers of abstraction, but mostly because of the cloning thing.

Services II: Electric Boogaloo

I’m going to sketch out a design, like I did before, for how I’d like tokio’s Service

abstractions to work. Bear with me here.

    pub trait ServiceFuture {
        type State;
        type Item;
        type Error;

        fn poll(&mut self, st: &mut Self::State) -> Poll<Self::Item, Self::Error>;
    }

    pub trait Service {
        type Request;
        type Response;
        type Error;
        type Future: ServiceFuture<State = Self, Item = Self::Response, Error = Self::Error>;

        fn on_request(&mut self, request: Self::Request) -> Self::Future;
    }

We have a Service trait that looks rather like the one from tokio: it takes a Request,

and spits out a Future that will eventually produce either a Response or an Error.

However, the Future we return is not just a normal Future: it’s a ServiceFuture!

This is a special kind of Future that, when polled, gets exclusive access to the State

object that created it. Essentially, this moves the ownership of the service state back into

one Service object - which is exactly what you would have under the traditional synchronous

way of doing things (fn server(req: Request) -> Result<Response, Error>), just async this time.

Obviously, this has some pitfalls - notably, it’s single-threaded only - but it may be

worth considering as an alternate server proposition.
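For what it’s worth, here is how implementing and driving those two traits might look. This is a sketch under assumptions: `Async` and `Poll` are stand-ins for the futures 0.1 types, the `Sized` bound on `Service` is added defensively for the `State = Self` constraint, and `Hits`, `HitFuture` and `drive` are hypothetical names. The driver is a busy loop, not a real event loop.

```rust
// Stand-ins for the futures 0.1 types (assumption, not the real crate).
enum Async<T> {
    Ready(T),
    NotReady,
}

type Poll<T, E> = Result<Async<T>, E>;

trait ServiceFuture {
    type State;
    type Item;
    type Error;
    fn poll(&mut self, st: &mut Self::State) -> Poll<Self::Item, Self::Error>;
}

// `Sized` is an addition for this sketch, not part of the design above.
trait Service: Sized {
    type Request;
    type Response;
    type Error;
    type Future: ServiceFuture<State = Self, Item = Self::Response, Error = Self::Error>;
    fn on_request(&mut self, request: Self::Request) -> Self::Future;
}

/// Hypothetical service: the state is owned directly, no Arc or Mutex.
struct Hits {
    count: u64,
}

/// The future stores only per-request data; shared state is lent to poll().
struct HitFuture {
    name: String,
}

impl ServiceFuture for HitFuture {
    type State = Hits;
    type Item = String;
    type Error = ();

    fn poll(&mut self, st: &mut Hits) -> Poll<String, ()> {
        st.count += 1; // exclusive access to the service state
        Ok(Async::Ready(format!("hello {}, you are visitor {}", self.name, st.count)))
    }
}

impl Service for Hits {
    type Request = String;
    type Response = String;
    type Error = ();
    type Future = HitFuture;

    fn on_request(&mut self, request: String) -> HitFuture {
        HitFuture { name: request }
    }
}

/// Toy driver: a real event loop would wait for a wakeup instead of spinning.
fn drive<S: Service>(svc: &mut S, req: S::Request) -> Result<S::Response, S::Error> {
    let mut fut = svc.on_request(req);
    loop {
        if let Async::Ready(resp) = fut.poll(svc)? {
            return Ok(resp);
        }
    }
}

fn main() {
    let mut svc = Hits { count: 0 };
    println!("{:?}", drive(&mut svc, "a".to_string()));
    println!("{:?}", drive(&mut svc, "b".to_string()));
}
```

Because the state is only ever borrowed for the duration of a poll, several `HitFuture`s can be in flight at once on one thread without any of them owning the counter.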

Peroration

This blog post has been, essentially, a catalog of my frustrations with the async Rust ecosystem

as a beginner in the field, and some naïve ideas for how it could be made more sensible. I am

very aware that the people designing the tokio and futures crates are admirable Rust contributors

with years more experience than I, and so probably have some justification for why things are designed

the way they are. I don’t want to annoy people; I just want to try and provoke some discussion

as to the design and implementation of async Rust.

Of course, as Linus Torvalds once said, “Talk is cheap. Show me the code.” I don’t have any code yet…but I will soon. Check back some time after hell freezes over for Asynchronous Rust, part II: Now Featuring Actual Implementations!
